Check Your Ego, Push the Boundary, and Reject the Magic: Clarke's Three Laws Redux

Clarke’s three laws: challenge assumptions, push past what feels possible, and refuse to treat AI as magic. Stay clear-headed and decisive in a moment defined by uncertainty and rapid change.

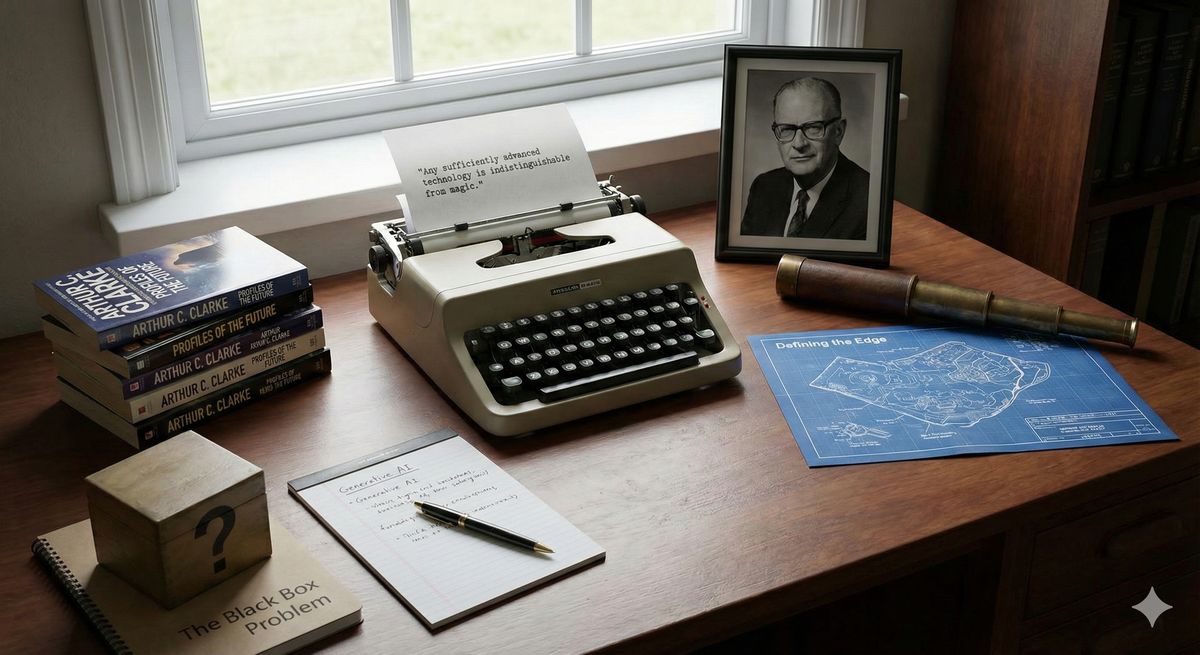

I spent much of my formative years reading the "Big Three" of science fiction: Asimov, Heinlein, and Arthur C. Clarke. While Asimov gave us the ethics of robotics and Heinlein explored the sociology of the future, Clarke was the engineer’s visionary.

Clarke, a British writer, inventor, and futurist, is best known for 2001: A Space Odyssey and Rendezvous with Rama (still a great book). Beyond fiction, he is credited with conceptualising the geostationary communications satellite decades before it became a reality.

Across several works, Clarke formulated three "laws" of prediction. He framed them around forecasting scientific and technological progress, but I find them a useful framework for cutting through the current noise surrounding Artificial Intelligence.

Law 1: The Trap of Experience

"When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong."

Clarke introduced this in his 1962 book Profiles of the Future, in a chapter analysing why experts—especially seasoned ones—are so often wrong about the future of technology. He identifies two distinct modes of failure:

The Failure of Nerve

This occurs when an expert has all the relevant facts but cannot accept the conclusion because it appears too radical or violates their intuition. They lack the “nerve” to follow the evidence to its revolutionary endpoint.

Clarke cites Simon Newcomb, a renowned astronomer who "proved" that heavier-than-air flight was impossible mere months before the Wright brothers succeeded. Newcomb had the facts; he simply could not imagine the leap.

Lord Kelvin is often cited as the archetype of the "distinguished but elderly scientist" Clarke describes. Brilliant in his prime, Kelvin is credited with several sweeping claims from his later years, although the sourcing for some of them is shaky:

- "Heavier-than-air flying machines are impossible." (1895)

- "X-rays will prove to be a hoax." (attributed; X-rays were not discovered until 1895)

- "Radio has no future." (1897)

The Failure of Imagination

This is perhaps a more forgiving failure. Here the prediction fails not because the expert lacked courage, but because the essential scientific principles had not yet been discovered.

Clarke references Auguste Comte, the philosopher who argued that while we could calculate stellar motion, we would never know the chemical composition of stars. Shortly after his death, spectroscopy proved otherwise.

The AI Warning

Those of us with twenty (or more!) years in technology risk becoming today’s "elderly scientists." We have lived through hype cycles such as SOA, blockchain, and the Metaverse, so it is tempting to point at an LLM's hallucinations or an agent's brittle logic and dismiss the whole field as mere "stochastic parroting."

The real danger is intellectual complacency: judging the technology by its present limitations rather than its trajectory. A senior engineer who dismisses AI coding assistants because "they generate insecure code today" is implicitly betting against their rate of improvement—a risky wager.

The Behaviour to Adopt: Informed Permissibility

We must suspend the reflex to dismiss. This is not blind acceptance; it is recognising the difference between "impossible" and "not ready yet." Watch the trajectory, not just the current snapshot. Let your teams challenge your scepticism. If you catch yourself saying "that will never work," treat it as a cognitive red flag.

🪨 Status-Quo Gravity – Experience becomes an anchor.

🚧 Boundary Blindness – Mistaking engineering limits for universal limits.

Law 2: Defining the Edge

"The only way of discovering the limits of the possible is to venture a little way past them into the impossible."

While the First Law is a warning about human fallibility (specifically among the elderly and distinguished), the Second Law is Clarke's methodology for progress. It challenges the definition of "impossible" itself, distinguishing between things that are fundamentally against the laws of physics (like perpetual motion) and things that are merely beyond our current engineering (like space elevators).

In Profiles of the Future, he presented a chart of future technologies in two columns:

- The Possible: Things that did not violate known physics but were currently out of reach (e.g., high-speed computing, space travel).

- The Impossible: Things that appeared to violate fundamental laws (e.g., anti-gravity, teleportation, time travel).

He wrote the Second Law to justify why serious researchers should still investigate the "Impossible" column. His argument was that we often mistake a technical difficulty for a fundamental limit. We cannot know which is which until we try to build it and fail (or succeed).

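The Apollo programme embodied this principle. Engineers did not determine the limits of the Saturn V's F-1 engine on a chalkboard; they fired it on test stands, deliberately provoked combustion instability (even detonating small charges inside the chamber), and mapped the boundary through controlled failure.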

The AI Warning

Corporate environments increasingly suffer from "Safetyism": waiting for perfect clarity, perfect governance, or perfect ROI before acting. Yet modern AI capabilities are emergent and often poorly documented. You cannot know whether an AI agent can support your customer workflow until you attempt to build it.

If we operate only within the "possible"—what is proven today—we stagnate.

The Behaviour to Adopt: Sanctioned Exploration

Establish a sandbox with non-sensitive data where teams are explicitly permitted to push tools until they break. Exploration only works when it is intentional: set small budgets, fixed timelines, and clear hypotheses. Design experiments that are safe to fail, and treat each failure as a point on the map of the boundary. As Clarke might have put it: you do not find the edge by imagining it; you find it by watching the system bend.

If engineers are not reporting failures, they are not pushing the boundary. Discovering the limit requires hitting it. Venturing into the "impossible" is how we uncover where the real commercial value lies.

The Second Law connects the other two:

- The First warns against expert pessimism.

- The Second shows how to challenge it.

- The Third reveals the consequences of success.

The Second Law shows us the value of stepping into uncharted territory. But once we push past what our intuition can easily grasp, we risk a new error: mistaking complexity for capability. This leads directly to Clarke’s Third Law.

🔐 Access: Who can run experiments

📁 Data: What can enter the sandbox

🧱 Environment: Where tests run safely

📝 Tracking: What must be logged

⚠️ Escalation: When to pause, review, or stop
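One way to make intentional exploration concrete is to refuse to start any experiment until the five questions above, along with its budget, timeline, and hypothesis, each have a written answer. The Python below is a minimal sketch under that assumption; `ExperimentCharter` and `missing_items` are hypothetical names, and the example values are invented for illustration.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass
class ExperimentCharter:
    """One sandbox experiment, written down before it starts (hypothetical structure)."""
    hypothesis: str    # what we expect to learn, stated up front
    budget: float      # small, fixed spend ceiling (currency left unspecified)
    end_date: date     # fixed timeline
    access: str        # who can run the experiment
    data: str          # what may enter the sandbox (non-sensitive only)
    environment: str   # where the tests run safely
    tracking: str      # what must be logged, failures included
    escalation: str    # when to pause, review, or stop


def missing_items(charter: ExperimentCharter) -> list[str]:
    """Return the names of blank text items or non-positive budgets; empty means ready to run."""
    missing = []
    for f in fields(charter):
        value = getattr(charter, f.name)
        if isinstance(value, str) and not value.strip():
            missing.append(f.name)
        elif isinstance(value, (int, float)) and value <= 0:
            missing.append(f.name)
    return missing


if __name__ == "__main__":
    charter = ExperimentCharter(
        hypothesis="An agent can draft first responses to routine support tickets",
        budget=500.0,
        end_date=date(2025, 3, 31),
        access="Support-tooling team only",
        data="",  # not yet agreed, so the experiment should not start
        environment="Isolated sandbox account",
        tracking="Every prompt, output, and failure",
        escalation="Stop on any sign of sensitive data",
    )
    print(missing_items(charter))  # ['data']
```

The check deliberately says nothing about whether the hypothesis is good; it only ensures nobody starts breaking things before the boundaries of the sandbox are agreed.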

Law 3: The Black Box Problem

"Any sufficiently advanced technology is indistinguishable from magic."

Clarke added this Third Law more than a decade after the first two, and it remains the one most frequently quoted—and most frequently misunderstood. Its point is not that technology is magic, but that when we don’t understand what we’re looking at, we stop thinking critically and start treating the system as mystical.

A Victorian-era observer holding a smartphone would have no conceptual model for it; they would see sorcery, not circuitry. Modern AI systems create a similar temptation. We no longer program machines step by step—we “prompt” them and receive outputs that appear creative, intuitive, or even uncanny. It is easy to slip into the language of enchantment: spells, incantations, hallucinations, “asking the model for wisdom.”

The AI Warning

But once technology becomes “magic,” it becomes unmanageable.

Recent failures show this clearly. Zillow Offers, Zillow's algorithm-driven home-buying business, relied on pricing models whose valuations were hard to interrogate. When the housing market moved away from the models' assumptions, as markets inevitably do, the company kept buying homes at prices it could not recover, and in 2021 it shut the business down, writing down hundreds of millions of dollars of inventory. Leadership had ceded judgment to a system it could not interrogate.

This is the real danger of the Third Law in a corporate environment. When leaders treat AI as mysterious, they surrender oversight, governance, and accountability. A black-box recommendation engine can quietly embed bias, inflate risk, or misprice decisions while no one notices—until the damage is irreversible.

The Behaviour to Adopt: Demystification

Your job is to pierce the veil. Reject narrative shortcuts like “the AI decided” or “the model thinks.” Insist on explainability, auditability, and mechanism. Require vendors to show how their systems work, not just what they output. Strip away the mystique until you see the statistics, engineering, and assumptions underneath.

AI is not magic, and treating it like magic is organisational malpractice. When you demystify the tool, fear recedes, control returns, and the real engineering challenges become solvable. If you cannot explain why a model made a decision, it has no business making one on your behalf.

Clarke’s Third Law warns us that when we confuse the appearance of magic with genuine mastery, we lose control of the technology entirely. And in a world where AI is accelerating faster than our intuitions, that loss of control is not an abstraction—it's a business risk. Which brings us to the lessons leaders must carry forward.

🧩 Explainability – Can we understand the decision?

🎛️ Controllability – Can we constrain and override it?

📊 Auditability – Can we inspect data, assumptions, and errors?

🚫 Fail one → Not production-safe.
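The three questions above behave as an all-or-nothing gate. Here is a minimal sketch in Python, assuming each question has already been answered yes or no during a review; `ModelAssessment` and `production_gate` are hypothetical names chosen for illustration, not part of any library or governance framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelAssessment:
    """Review answers for one AI system (hypothetical structure)."""
    name: str
    explainable: bool   # Can we understand the decision?
    controllable: bool  # Can we constrain and override it?
    auditable: bool     # Can we inspect data, assumptions, and errors?


def production_gate(a: ModelAssessment) -> tuple[bool, list[str]]:
    """Return (is_production_safe, failed_checks); failing any single check blocks production."""
    checks = {
        "explainability": a.explainable,
        "controllability": a.controllable,
        "auditability": a.auditable,
    }
    failed = [check for check, passed in checks.items() if not passed]
    return (not failed, failed)


if __name__ == "__main__":
    vendor_model = ModelAssessment("pricing-engine", explainable=False, controllable=True, auditable=True)
    ok, failed = production_gate(vendor_model)
    print(ok, failed)  # False ['explainability']
```

Returning the list of failed checks, not just a yes or no, keeps the conversation on what must be fixed rather than on whether the model "feels" trustworthy.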

Clarke's Laws Redux

Clarke’s fiction was generally grounded in hard science, and our approach to AI must be the same.

Law 1 – Check your ego: Don’t be the “elderly scientist” who says it can’t be done just because you couldn’t do it yesterday. Experts are most often wrong when they say “impossible.”

Law 2 – Push the boundary: You won’t find the value by staying in the safe zone. Sanction the search for the breaking point.

Law 3 – Reject the magic: Demand to see the wires. If you can’t explain it, you can’t manage it. If it feels like magic, you’ve already lost control.

Complacency is the real risk. Clarke’s laws remind us that leaders win by challenging assumptions, testing boundaries, and refusing to run their business on black boxes. Treat AI as engineering, not enchantment, and you stay in control of both the opportunity and the risk.

If you are navigating AI uncertainty, I’d love to help—contact me anytime.